This introduction to statistical learning shows how data analysis helps businesses and organizations make informed decisions. Statistical learning, a cornerstone of modern data science, gives practitioners tools and techniques for extracting meaningful insights from complex datasets.

From healthcare to finance, statistical learning is used across industries to identify patterns, predict outcomes, and optimize processes. This guide covers the fundamentals: the main types of methods, data preparation and exploration, model selection and evaluation, common modeling techniques, and real-world applications.

1. Introduction to Statistical Learning

Statistical learning is a branch of data analysis that uses statistical methods to extract knowledge from data. It plays a crucial role in modern data analysis, enabling researchers and practitioners to make informed decisions based on large and complex datasets.

There are three main types of statistical learning methods: supervised learning, unsupervised learning, and reinforcement learning.

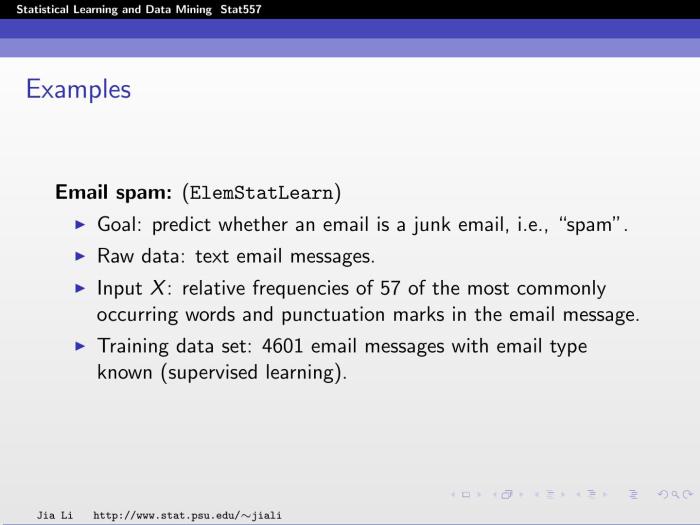

- Supervised learning involves training a model on labeled data, where the model learns to predict the output for new, unseen data.

- Unsupervised learning involves finding patterns and structures in unlabeled data, without any explicit supervision.

- Reinforcement learning involves training a model through interactions with an environment, where the model learns to make decisions that maximize a reward function.
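
As a rough illustration of the first two categories, the sketch below uses pure Python. The function names (`nearest_neighbor_predict`, `two_means_1d`) and the toy data are assumptions made for this example and do not come from any library. Reinforcement learning needs an environment to interact with, so it is left out here.

```python
def nearest_neighbor_predict(train_x, train_y, x):
    """Supervised: return the label of the closest labeled training point."""
    best = min(range(len(train_x)), key=lambda i: abs(train_x[i] - x))
    return train_y[best]


def two_means_1d(values, iterations=10):
    """Unsupervised: split unlabeled 1-D values into two groups (k-means, k=2)."""
    c0, c1 = min(values), max(values)  # start the two centers at the extremes
    g0, g1 = list(values), []
    for _ in range(iterations):
        # Assign every value to its nearest center.
        g0 = [v for v in values if abs(v - c0) <= abs(v - c1)]
        g1 = [v for v in values if abs(v - c0) > abs(v - c1)]
        if not g1:  # all values identical: nothing to split
            break
        # Move each center to the mean of its group.
        c0, c1 = sum(g0) / len(g0), sum(g1) / len(g1)
    return g0, g1


# Supervised: labels are given for the training data.
label = nearest_neighbor_predict([1.0, 2.0, 8.0, 9.0],
                                 ["low", "low", "high", "high"], 7.5)

# Unsupervised: only the values are given; the grouping is discovered.
groups = two_means_1d([1.0, 2.0, 8.0, 9.0])
```

The supervised function needs `train_y`, while the unsupervised one works from the values alone; that difference in inputs is the distinction the list above describes.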

Statistical learning has been successfully employed in a wide range of applications, including:

- Predicting customer churn

- Detecting fraud

- Recommending products

- Analyzing social media data

- Developing self-driving cars

2. Data Preparation and Exploration

Data preparation is a critical step in statistical learning, as it ensures that the data is clean, consistent, and ready for analysis. Common data preparation techniques include:

- Data cleaning: Correcting or removing errors and duplicates, and deciding how to handle missing values and outliers.

- Data transformation: Converting data into a format that is suitable for analysis, such as scaling or normalizing the data.

- Feature engineering: Creating new features from existing data to improve the performance of the model.
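
The three techniques above can be sketched in a few lines of plain Python. The record layout (`height_cm`, `weight_kg`), the derived `bmi` feature, and the function name `prepare` are hypothetical choices for this example only.

```python
def prepare(rows):
    """Clean, extend, and scale a list of {'height_cm', 'weight_kg'} records."""
    # Data cleaning: drop rows with missing values, then drop exact duplicates.
    seen, cleaned = set(), []
    for row in rows:
        if row["height_cm"] is None or row["weight_kg"] is None:
            continue
        key = (row["height_cm"], row["weight_kg"])
        if key not in seen:
            seen.add(key)
            cleaned.append(dict(row))  # copy so the input is not modified
    if not cleaned:
        return []

    # Feature engineering: derive body mass index from the two raw columns.
    for row in cleaned:
        row["bmi"] = row["weight_kg"] / (row["height_cm"] / 100) ** 2

    # Data transformation: min-max scale height into the range [0, 1].
    heights = [row["height_cm"] for row in cleaned]
    lo, hi = min(heights), max(heights)
    for row in cleaned:
        row["height_scaled"] = (row["height_cm"] - lo) / (hi - lo) if hi > lo else 0.0
    return cleaned


raw = [
    {"height_cm": 150, "weight_kg": 50},
    {"height_cm": 150, "weight_kg": 50},    # duplicate
    {"height_cm": 200, "weight_kg": None},  # missing value
    {"height_cm": 200, "weight_kg": 80},
]
prepared = prepare(raw)
```

In practice the scaling parameters (`lo` and `hi` here) should be computed on the training data only and then reused for new data, so that information from the evaluation set does not leak into the model.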

Exploratory data analysis (EDA) is a process of exploring and visualizing the data to identify patterns, trends, and relationships. EDA techniques include:

- Univariate analysis: Analyzing the distribution of each variable individually.

- Bivariate analysis: Analyzing the relationship between two variables.

- Multivariate analysis: Analyzing the relationships among three or more variables at once.
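
A minimal sketch of the first two kinds of analysis, using only Python's standard `statistics` module. The helper names `summarize` and `pearson` and the sample values are assumptions for illustration; a real EDA would usually add plots as well.

```python
import statistics


def summarize(values):
    """Univariate analysis: basic summary statistics for one variable."""
    return {
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "stdev": statistics.stdev(values),  # sample standard deviation
    }


def pearson(xs, ys):
    """Bivariate analysis: Pearson correlation between two variables."""
    mx, my = statistics.mean(xs), statistics.mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sum((x - mx) ** 2 for x in xs) ** 0.5
    sy = sum((y - my) ** 2 for y in ys) ** 0.5
    return cov / (sx * sy)


hours_studied = [1, 2, 3, 4, 5]
exam_score = [52, 58, 65, 70, 78]

summary = summarize(exam_score)
correlation = pearson(hours_studied, exam_score)
```

A correlation close to 1 or -1 suggests a strong linear relationship, but it does not by itself show that one variable causes the other.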

3. Model Selection and Evaluation

Model selection is the process of choosing the best model for a given dataset and problem. Common criteria for evaluating classification models include:

- Accuracy: The proportion of correct predictions.

- Precision: The proportion of positive predictions that are correct.

- Recall: The proportion of actual positives that are correctly predicted.

- F1-score: The harmonic mean of precision and recall.
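
To make these definitions concrete, the sketch below computes all four metrics from true and predicted labels, with F1 calculated as the harmonic mean of precision and recall. The function name `classification_metrics` and the example labels are assumptions for illustration.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, and F1 for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # true positives
    fp = sum(t != positive and p == positive for t, p in pairs)  # false positives
    fn = sum(t == positive and p != positive for t, p in pairs)  # false negatives
    tn = sum(t != positive and p != positive for t, p in pairs)  # true negatives

    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


y_true = [1, 1, 1, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0, 0, 0]
metrics = classification_metrics(y_true, y_pred)
```

Here two of the three actual positives are found (recall 2/3) and two of the three positive predictions are right (precision 2/3), while accuracy is 6/8 because the four true negatives also count as correct.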

Model selection techniques include:

- Cross-validation: Splitting the data into multiple subsets and training and evaluating the model on different combinations of these subsets.

- Hyperparameter tuning: Searching for the best hyperparameters, which are settings chosen before training (such as the depth of a decision tree) that control the learning process rather than being learned from the data.
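
The two techniques can be combined: score each candidate hyperparameter value with cross-validation and keep the best one. The sketch below is one simple way to do that in plain Python, assuming contiguous folds without shuffling, mean squared error as the score, and a toy nearest-neighbor regressor. All function names (`k_fold_indices`, `cross_validate`, `make_knn`, `tune_neighbors`, `mean_baseline`) are hypothetical.

```python
def k_fold_indices(n, k):
    """Yield (train_idx, test_idx) pairs for k contiguous folds over n samples."""
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test_idx = list(range(start, start + size))
        train_idx = [i for i in range(n) if i < start or i >= start + size]
        yield train_idx, test_idx
        start += size


def cross_validate(xs, ys, fit_predict, k=5):
    """Return the mean squared error averaged over k held-out folds."""
    fold_errors = []
    for train_idx, test_idx in k_fold_indices(len(xs), k):
        preds = fit_predict([xs[i] for i in train_idx],
                            [ys[i] for i in train_idx],
                            [xs[i] for i in test_idx])
        errors = [(p - ys[i]) ** 2 for p, i in zip(preds, test_idx)]
        fold_errors.append(sum(errors) / len(errors))
    return sum(fold_errors) / len(fold_errors)


def mean_baseline(train_x, train_y, test_x):
    """Trivial model: always predict the mean of the training targets."""
    mean = sum(train_y) / len(train_y)
    return [mean for _ in test_x]


def make_knn(n_neighbors):
    """Build a nearest-neighbor regressor; n_neighbors is its hyperparameter."""
    def fit_predict(train_x, train_y, test_x):
        preds = []
        for x in test_x:
            order = sorted(range(len(train_x)), key=lambda i: abs(train_x[i] - x))
            nearest = order[:n_neighbors]
            preds.append(sum(train_y[i] for i in nearest) / len(nearest))
        return preds
    return fit_predict


def tune_neighbors(xs, ys, candidates, k=5):
    """Hyperparameter tuning: pick the candidate with the lowest CV error."""
    return min(candidates, key=lambda n: cross_validate(xs, ys, make_knn(n), k))


xs = [float(i) for i in range(10)]
ys = [2 * x for x in xs]
best_n = tune_neighbors(xs, ys, candidates=[1, 3, 5], k=5)
```

Real datasets are usually shuffled before being split into folds, and a final held-out test set is kept aside so that the tuned model can be evaluated on data it never influenced.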

4. Statistical Modeling Techniques

There are many different statistical modeling techniques available, each with its own strengths and weaknesses. Some of the most commonly used techniques include:

| Model | Description | Strengths | Weaknesses |
|---|---|---|---|
| Linear regression | A model that predicts a continuous output variable as a linear combination of input variables. | Simplicity, interpretability | Assumes linearity, can be sensitive to outliers |
| Logistic regression | A model that predicts the probability of a binary output variable (0 or 1) by applying the logistic function to a linear combination of input variables. | Outputs class probabilities, interpretable coefficients | Assumes a linear decision boundary in the input features, assumes independence of observations |
| Decision trees | A model that predicts an output variable by recursively splitting the data into smaller subsets based on the values of input variables. | Non-parametric, easy to interpret | Can be unstable, prone to overfitting |
| Support vector machines | A model that predicts an output variable by finding the maximum-margin hyperplane that separates the data into two classes. | Can handle non-linear relationships through kernel functions, effective in high-dimensional spaces | Can be computationally expensive, difficult to interpret |
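
To show what the first two rows of the table look like in code, here is a minimal sketch with a single input variable: a least-squares fit for linear regression and the logistic function used by logistic regression. The function names and the sample data are assumptions for illustration, and the logistic weights are passed in by hand rather than learned.

```python
import math


def fit_simple_linear_regression(xs, ys):
    """Least-squares fit of y = slope * x + intercept for one input variable."""
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are identical; slope is undefined")
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = my - slope * mx
    return slope, intercept


def logistic_probability(x, weight, bias):
    """Logistic regression with one input: P(y = 1 | x) = sigmoid(weight * x + bias)."""
    return 1.0 / (1.0 + math.exp(-(weight * x + bias)))


# Linear regression: recover the line behind points that lie on y = 2x + 1.
slope, intercept = fit_simple_linear_regression([0, 1, 2, 3], [1, 3, 5, 7])

# Logistic regression: turn a linear score into a probability between 0 and 1.
p = logistic_probability(x=0.0, weight=1.5, bias=0.0)
```

Both models start from the same linear combination of inputs; logistic regression passes it through the logistic function so that the output can be read as a probability.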

5. Case Studies and Applications

Statistical learning has been successfully applied in a wide range of domains, including:

- Healthcare: Predicting patient outcomes, identifying diseases, and developing new treatments.

- Finance: Predicting stock prices, detecting fraud, and managing risk.

- Marketing: Predicting customer churn, identifying customer segments, and optimizing marketing campaigns.

- Transportation: Optimizing traffic flow, predicting travel times, and developing self-driving cars.

Despite its successes, statistical learning also faces challenges and limitations. These include:

- Data quality: Statistical learning models can only be as good as the data they are trained on.

- Model interpretability: Some statistical learning models can be difficult to interpret, making it challenging to understand the reasons for their predictions.

- Computational complexity: Some statistical learning models can be computationally expensive to train and use.

Despite these challenges, statistical learning remains a powerful tool for extracting knowledge from data. As data continues to grow in volume and complexity, statistical learning will become increasingly important for making informed decisions in a wide range of applications.

FAQ Section

What is statistical learning?

Statistical learning encompasses a range of techniques used to extract knowledge from data, enabling computers to learn from experience without explicit programming.

What are the different types of statistical learning methods?

Common statistical learning methods include supervised learning (predicting outcomes based on labeled data), unsupervised learning (discovering patterns in unlabeled data), and reinforcement learning (optimizing actions through trial and error).

How is statistical learning used in real-world applications?

Statistical learning finds applications in diverse fields, including healthcare (disease diagnosis), finance (fraud detection), and marketing (customer segmentation).